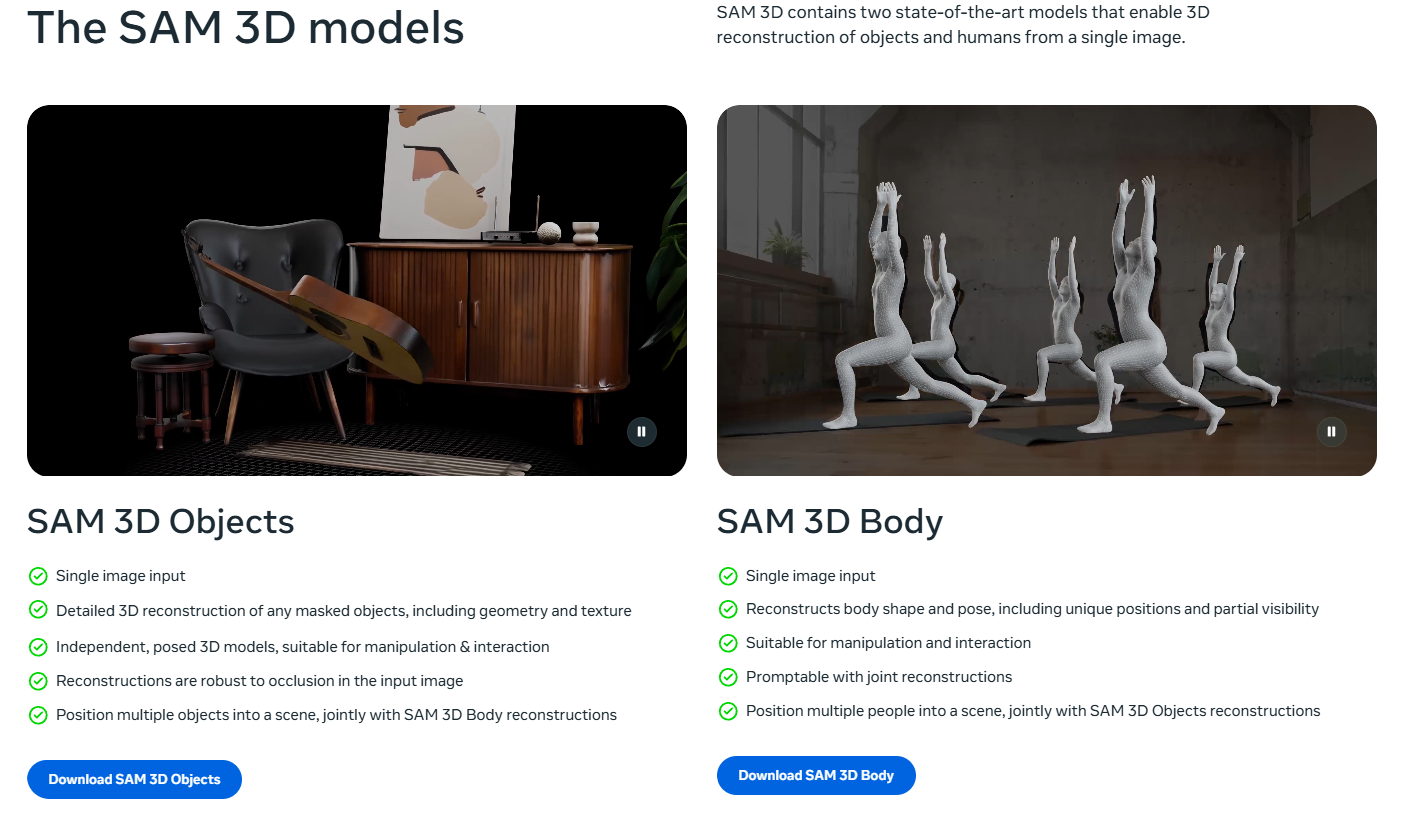

Meta has launched SAM 3D Objects, a foundation model that reconstructs 3D geometry, texture, and spatial layout from a single photograph. Announced on November 19, 2025, as part of the broader SAM 3D release, the model is designed for real-world images that involve occlusion, clutter, and other challenging visual conditions.

Foundation Model Performance

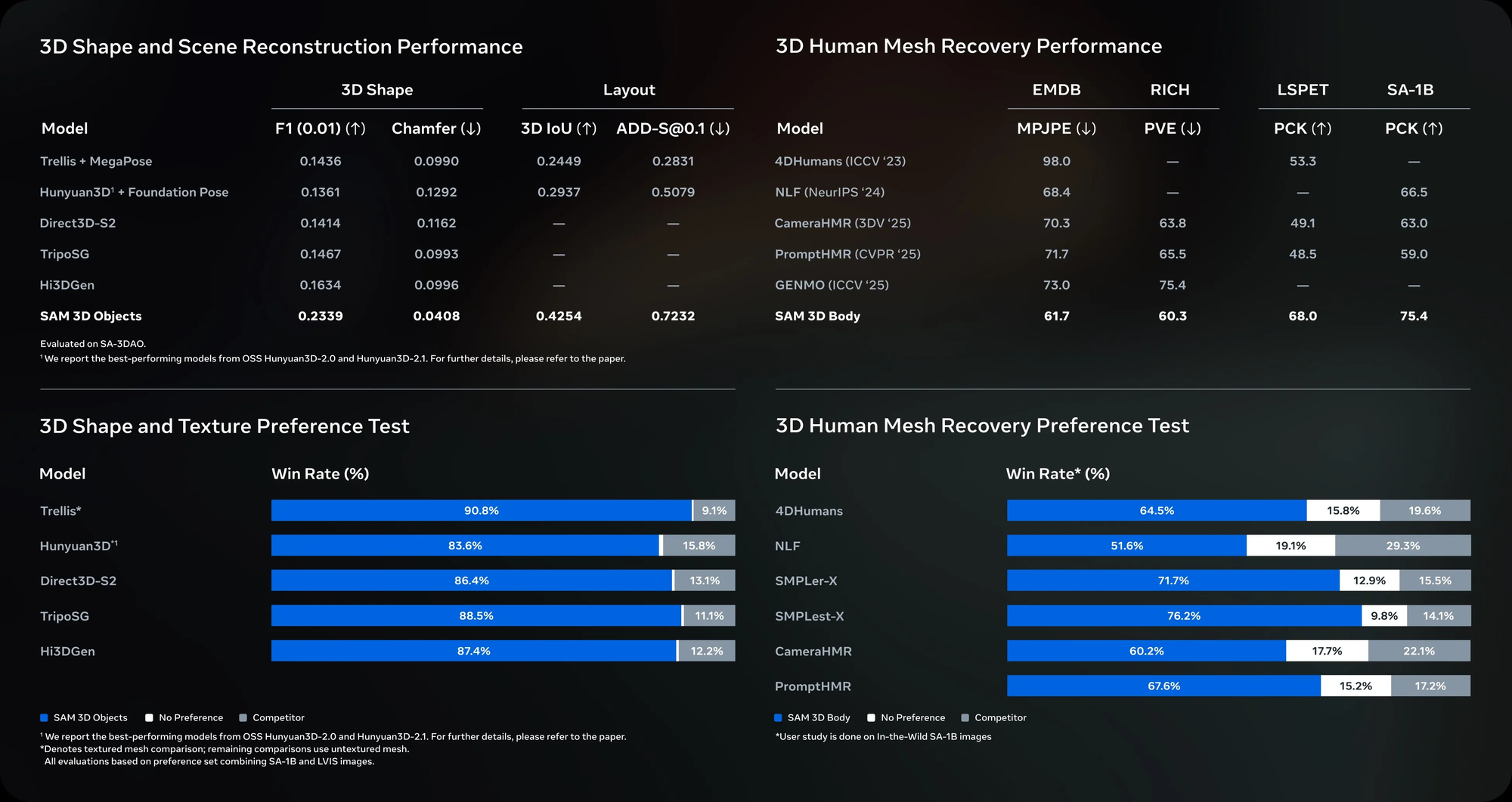

Meta describes SAM 3D Objects as a new approach to visually grounded 3D reconstruction and object pose estimation, capable of generating detailed 3D shapes and textures from natural images. In head-to-head human preference tests, the model achieved a win rate of at least 5 to 1 over other leading 3D reconstruction models.
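To put the 5-to-1 figure in more familiar terms, the short sketch below converts a wins-to-losses ratio into the share of pairwise comparisons won. It assumes every comparison produces a winner (no ties), which the article does not state; the function name is illustrative.

```python
def win_share(wins: float, losses: float) -> float:
    """Fraction of pairwise comparisons won, assuming there are no ties."""
    if wins < 0 or losses < 0 or wins + losses == 0:
        raise ValueError("wins and losses must be non-negative and not both zero")
    return wins / (wins + losses)


# A 5-to-1 ratio means 5 wins for every 1 loss.
share = win_share(5, 1)
print(f"{share:.1%}")  # 83.3%
```

Under that assumption, "at least 5 to 1" corresponds to human raters preferring the model's output in roughly 83% or more of comparisons.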

The model produces reconstructions in seconds, which Meta attributes to diffusion shortcuts and engineering optimizations, enabling near-real-time 3D applications such as robotics perception modules. That speed makes the technology a practical candidate for commercial deployment in augmented reality, gaming, and e-commerce.

Technical Architecture

SAM 3D Objects combines a progressive training approach with a data annotation engine that incorporates human feedback. During development, this data engine annotated nearly 1 million distinct images and generated approximately 3.14 million mesh models. The resulting training data allows the system to handle small objects, unusual poses, and other difficult cases found in uncurated natural scenes.

The model predicts geometry, texture, and layout simultaneously from masked objects in an image, which Meta researchers characterize as breaking through "long-standing barriers in 3D data of the physical world." The system is particularly robust when objects are partially occluded or sit in cluttered environments.
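The idea of a "masked object" can be illustrated with a minimal sketch: a binary mask selects which pixels of the photograph belong to the object of interest. The code below is not the model's input format, which is not described here; it only shows, under that assumption, how an image and a mask of the same size can be combined into an RGBA image whose alpha channel marks the object. All names are hypothetical.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
RGBA = Tuple[int, int, int, int]


def apply_mask(image: List[List[RGB]], mask: List[List[bool]]) -> List[List[RGBA]]:
    """Attach an alpha channel: 255 where the mask is True, 0 elsewhere."""
    same_height = len(image) == len(mask)
    same_width = all(len(row) == len(m) for row, m in zip(image, mask))
    if not (same_height and same_width):
        raise ValueError("image and mask must have the same height and width")
    return [
        [(r, g, b, 255 if keep else 0) for (r, g, b), keep in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


# A 2x2 image in which only the top-left pixel belongs to the object.
image = [[(200, 10, 10), (0, 0, 0)], [(0, 0, 0), (0, 0, 0)]]
mask = [[True, False], [False, False]]
masked = apply_mask(image, mask)
```

In practice the mask would typically come from a segmentation step, and array libraries would replace the nested lists used here for self-containment.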

Commercial Applications

Meta has already integrated SAM 3D Objects into Facebook Marketplace through a "View in Room" feature, which lets shoppers visualize furniture and other products in their homes before purchasing. The feature shows that the model's spatial reconstructions are accurate enough for consumer-facing use.

The technology has potential applications across robotics, virtual reality, augmented reality, gaming, and digital design. Meta has positioned the model as a tool that developers and businesses can use without specialized computer vision expertise.

Research Contributions

Meta also developed SAM 3D Artist Objects, described as "a first-of-its-kind evaluation dataset" designed to challenge existing 3D reconstruction methods. The company collaborated with artists to build the dataset, which it characterizes as "a new standard for measuring research progress in 3D."

The model can reconstruct everyday objects including furniture, tools, gadgets, and complete indoor scenes, predicting depth, shape, and structure from single-image inputs. This capability extends beyond isolated object reconstruction to full scene understanding and spatial layout prediction.

How to Access and Use SAM 3D Objects

Meta has made SAM 3D Objects available through multiple access channels for developers, researchers, and creators. The model weights and checkpoints are hosted on Hugging Face, providing standardized access for machine learning practitioners. Users can access the official repository at facebook/sam-3d-objects on the Hugging Face platform for model downloads and documentation.
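As a rough sketch of what fetching the weights might look like, the snippet below wraps `snapshot_download` from the `huggingface_hub` package. The repository name comes from the text above; the target folder, the function name, and the injectable `downloader` parameter are illustrative assumptions. The repository may require accepting license terms and supplying an access token before downloads succeed, so treat this as a starting point rather than the official procedure.

```python
from typing import Callable, Optional

REPO_ID = "facebook/sam-3d-objects"  # repository name given in the article


def download_checkpoints(
    local_dir: str = "checkpoints/sam-3d-objects",  # hypothetical target folder
    token: Optional[str] = None,
    downloader: Optional[Callable[..., str]] = None,
) -> str:
    """Download a snapshot of the model repository and return its local path."""
    if downloader is None:
        # Imported lazily so this module loads even without huggingface_hub installed.
        from huggingface_hub import snapshot_download

        downloader = snapshot_download
    return downloader(repo_id=REPO_ID, local_dir=local_dir, token=token)
```

Calling `download_checkpoints(token=...)` with a valid Hugging Face token would place the files in the chosen folder; the `downloader` argument exists only so the function can be exercised without network access.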

For immediate experimentation without setup requirements, Meta offers a web-based demo at aidemos.meta.com/segment-anything/editor/convert-image-to-3d where users can upload images and generate 3D reconstructions directly in their browser. This interactive playground allows creators to test the technology's capabilities on their own images without technical infrastructure.

Developers who want to implement SAM 3D Objects in their own applications can access the complete source code on GitHub at facebookresearch/sam-3d-objects. The repository includes installation instructions, inference code, and Jupyter notebooks demonstrating both single-object and multi-object 3D reconstruction workflows. A quick-start Python script is available that loads the model, processes an image with a mask, and exports the resulting 3D object as a Gaussian splat file.
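Once a splat file has been exported, a quick sanity check is to read its header. The sketch below assumes the Gaussian splat is stored in the common PLY container, which the article does not confirm, and uses only the standard library; `parse_ply_header` is a hypothetical helper, not part of the repository.

```python
from typing import List, Tuple


def parse_ply_header(data: bytes) -> Tuple[str, int, List[str]]:
    """Return (format, vertex_count, vertex_property_names) from PLY file bytes."""
    end = data.find(b"end_header")
    if not data.startswith(b"ply") or end == -1:
        raise ValueError("not a PLY file")
    fmt, count, props = "", 0, []
    current_element = None
    for line in data[:end].decode("ascii").splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "format":
            fmt = parts[1]
        elif parts[0] == "element":
            current_element = parts[1]
            if current_element == "vertex":
                count = int(parts[2])
        elif parts[0] == "property" and current_element == "vertex":
            props.append(parts[-1])  # the property name is the last token
    return fmt, count, props


# Synthetic two-point file used only to demonstrate the parser.
sample = (
    b"ply\nformat binary_little_endian 1.0\nelement vertex 2\n"
    b"property float x\nproperty float y\nproperty float z\n"
    b"property float opacity\nend_header\n" + b"\x00" * 32
)
fmt, count, props = parse_ply_header(sample)
```

For a real export, the bytes would come from something like `open("splat.ply", "rb").read()`, where the filename is a placeholder; in a splat file each vertex would correspond to one Gaussian.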

For users working with human subjects, Meta also offers guidance on combining SAM 3D Objects with SAM 3D Body so that the outputs of both models are aligned in the same reference frame.
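Placing reconstructions in a common reference frame generally amounts to applying a scale, a rotation, and a translation to each object's local coordinates. The sketch below illustrates that operation in plain Python; it is a generic similarity transform under assumed conventions, not the alignment procedure from Meta's guidance, and every name in it is hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def rotation_about_y(angle_rad: float) -> List[List[float]]:
    """3x3 rotation matrix for a right-handed rotation about the y axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def to_shared_frame(
    points: Sequence[Vec3],
    scale: float,
    rotation: Sequence[Sequence[float]],
    translation: Vec3,
) -> List[Vec3]:
    """Map object-local points into a shared frame: p' = scale * R @ p + t."""
    out = []
    for p in points:
        rotated = [sum(rotation[i][j] * p[j] for j in range(3)) for i in range(3)]
        out.append(tuple(scale * rotated[i] + translation[i] for i in range(3)))
    return out


# Rotate a point a quarter turn about y, double its size, then shift it.
placed = to_shared_frame(
    [(1.0, 0.0, 0.0)],
    scale=2.0,
    rotation=rotation_about_y(math.pi / 2),
    translation=(0.0, 1.0, 5.0),
)
```

If two models each report such a pose relative to the same camera, applying each model's transform to its own output would bring both into that camera's frame.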

All model checkpoints, inference code, and research materials are released under the SAM License, so usage is subject to Meta's licensing terms. The GitHub repository documents setup procedures, dependencies, and implementation examples to ease integration into existing computer vision pipelines.

Open Source Release

Meta has released SAM 3D Objects as an open-source project, sharing model checkpoints and inference code publicly. The approach reflects Meta's strategy of accelerating adoption and establishing the technology as a standard platform for AI-powered 3D reconstruction tools.

The checkpoints and code are licensed under the SAM License, and contributions to the repository are governed by Meta's standard code of conduct and contribution guidelines.

Sources

https://ai.meta.com/blog/sam-3d/

https://github.com/facebookresearch/sam-3d-objects

https://ai.meta.com/sam3d/

https://about.fb.com/news/2025/11/new-sam-models-detect-objects-create-3d-reconstructions/

https://www.youtube.com/watch?v=B7PZuM55ayc

https://huggingface.co/facebook/sam-3d-objects

https://www.aidemos.meta.com/segment-anything/editor/convert-image-to-3d